Stanford Medicine Program for Artificial Intelligence in Healthcare

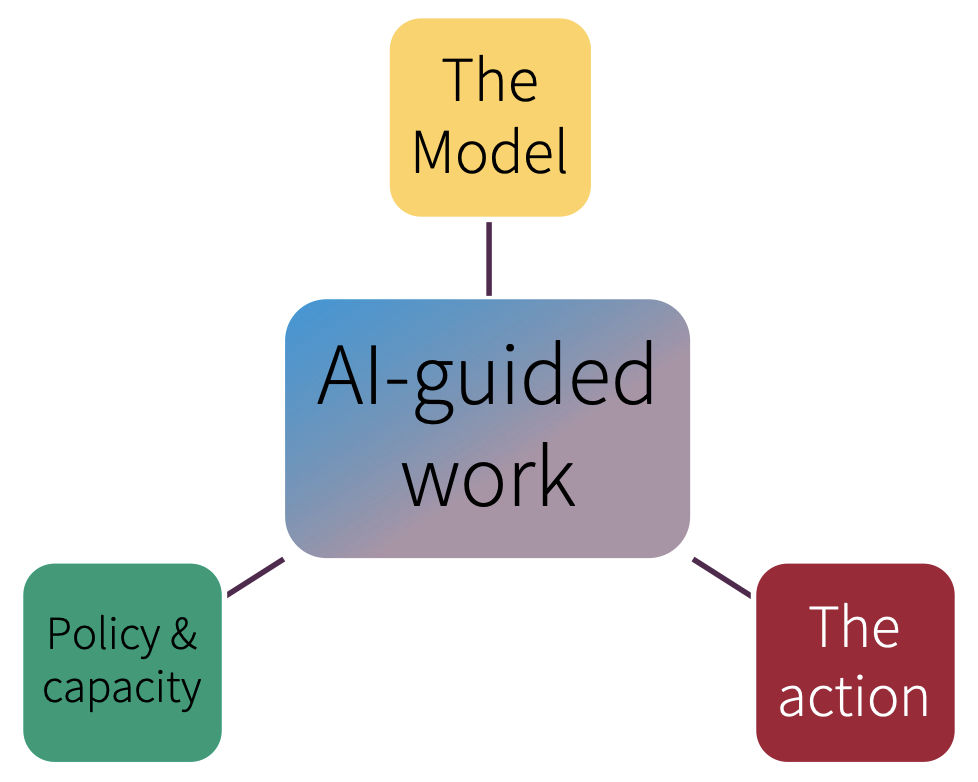

In healthcare, predictive models play a role not unlike that of blood tests, X-rays, or MRIs: they influence decisions about whether an intervention is appropriate. Whether a model is useful depends on the interplay between the model's output, the intervention it triggers, and the intervention’s benefits and harms. We are working on a set of efforts collectively referred to as the Stanford Medicine Program for Artificial Intelligence in Healthcare, with the mission of bringing AI technologies to the clinic safely, cost-effectively, and ethically.

Our research stemmed from an effort to improve palliative care using machine learning. Ensuring that machine learning models are clinically useful requires estimating the hidden deployment cost of predictive models, quantifying the impact of work capacity constraints on achievable benefit, estimating individualized utility, and learning optimal decision thresholds. Pre-empting ethical challenges often requires keeping humans in the loop and examining the consequences of model-guided decision making in the presence of clinical care guidelines.
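To make the idea of capacity-limited benefit and decision thresholds concrete, here is a minimal sketch in Python. It is an illustration only, not the program's actual method: the function names, the utility values (`benefit_tp`, `harm_fp`), and the toy data are all assumptions chosen for the example.

```python
def net_benefit(probs, outcomes, threshold, benefit_tp, harm_fp, capacity=None):
    """Total utility of intervening on patients with predicted risk >= threshold.

    Hypothetical utility model: each treated patient who truly needed the
    intervention contributes +benefit_tp, each treated patient who did not
    contributes -harm_fp. If `capacity` is set, only the highest-risk
    flagged patients up to that count are treated.
    """
    flagged = sorted(
        ((p, y) for p, y in zip(probs, outcomes) if p >= threshold),
        key=lambda pair: pair[0],
        reverse=True,
    )
    if capacity is not None:
        flagged = flagged[:capacity]
    return sum(benefit_tp if y else -harm_fp for _, y in flagged)


def best_threshold(probs, outcomes, benefit_tp, harm_fp, capacity=None):
    """Pick the candidate threshold (among observed scores) with highest utility."""
    candidates = sorted(set(probs))
    return max(
        candidates,
        key=lambda t: net_benefit(probs, outcomes, t, benefit_tp, harm_fp, capacity),
    )


# Toy data (made up for illustration): predicted risks and true outcomes.
probs = [0.9, 0.8, 0.6, 0.4, 0.2]
outcomes = [1, 1, 0, 1, 0]

unconstrained = best_threshold(probs, outcomes, benefit_tp=10, harm_fp=4)
capped = net_benefit(probs, outcomes, unconstrained, 10, 4, capacity=2)
```

In this toy setting the utility-maximizing threshold depends on the assumed benefit and harm values rather than on model accuracy alone, and limiting the care team to two interventions lowers the benefit that the same model can achieve.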

Russ Altman and Nigam Shah take an in-depth look at the growing influence of “data-driven medicine.”

Keeping the Human in the Loop for Equitable and Fair Use of ML in Healthcare, at AIMiE 2018

Building a Machine Learning Healthcare System, at XLDB, April 30 2018