Responsible AI in Healthcare

Making Machine Learning Models Clinically Useful

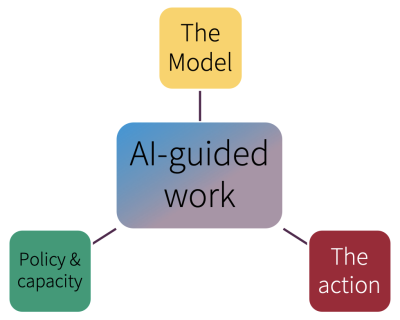

Whether a classifier or prediction model is useful in guiding care depends on the interplay between the model's output, the intervention it triggers, and the intervention's benefits and harms.

We study this interplay to bring AI to the clinic safely, cost-effectively, and ethically, and to inform the work of the Data Science Team at Stanford Healthcare in performing assessments that ensure we are creating Fair, Useful, Reliable Models (FURM). Blog posts at HAI summarize our work in an easily accessible manner. Our research stemmed from an effort to improve palliative care using machine learning. Ensuring that machine learning models are clinically useful requires estimating the hidden deployment cost of predictive models, quantifying the impact of work capacity constraints on achievable benefit, estimating individualized utility, and learning optimal decision thresholds. Pre-empting ethical challenges often requires keeping humans in the loop and examining the consequences of model-guided decision making in the presence of clinical care guidelines.
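To make the interplay between model output, intervention, and capacity concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the group's published method: the function names, the fixed per-case benefit and harm values, and the toy data are all hypothetical. It scores each candidate threshold by the total utility of the interventions it triggers and optionally caps how many flagged cases the care team can act on.

```python
from typing import Optional, Sequence


def utility_at_threshold(
    scores: Sequence[float],
    labels: Sequence[int],
    threshold: float,
    benefit_tp: float,
    harm_fp: float,
    capacity: Optional[int] = None,
) -> float:
    """Total utility of intervening on cases scored at or above `threshold`.

    Assumptions (hypothetical, for illustration only):
    - each true positive that receives the intervention yields `benefit_tp`
    - each false positive that receives the intervention costs `harm_fp`
    - if `capacity` is set, only the highest-scoring flagged cases are acted on
    """
    flagged = sorted(
        ((s, y) for s, y in zip(scores, labels) if s >= threshold),
        key=lambda pair: pair[0],
        reverse=True,
    )
    if capacity is not None:
        flagged = flagged[:capacity]
    true_pos = sum(1 for _, y in flagged if y == 1)
    false_pos = len(flagged) - true_pos
    return true_pos * benefit_tp - false_pos * harm_fp


def best_threshold(
    scores: Sequence[float],
    labels: Sequence[int],
    benefit_tp: float,
    harm_fp: float,
    capacity: Optional[int] = None,
) -> float:
    """Return the observed score that maximizes total utility as a cutoff."""
    candidates = sorted(set(scores))
    return max(
        candidates,
        key=lambda t: utility_at_threshold(
            scores, labels, t, benefit_tp, harm_fp, capacity
        ),
    )


# Toy data: five patients with model scores and true outcomes.
scores = [0.9, 0.8, 0.6, 0.4, 0.2]
labels = [1, 0, 1, 0, 0]

unconstrained = best_threshold(scores, labels, benefit_tp=1.0, harm_fp=0.5)
capped_utility = utility_at_threshold(
    scores, labels, unconstrained, benefit_tp=1.0, harm_fp=0.5, capacity=1
)
```

On this toy data the unconstrained optimum is a threshold of 0.6 with a total utility of 1.5. If the team can act on only one patient, the utility at that same threshold drops to 1.0, which is the sense in which work capacity limits achievable benefit. A realistic analysis would replace the fixed benefit and harm values with estimates specific to the intervention and the patient.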

Creation and Adoption of Foundation Models in Medicine

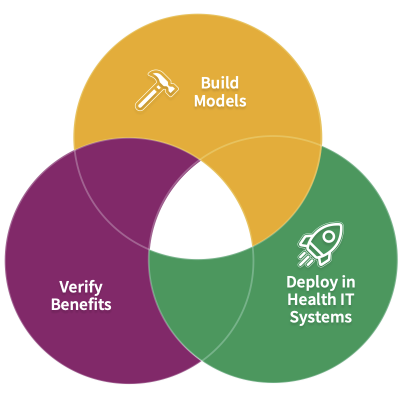

Given the high interest in using large language models (LLMs) in medicine, their creation and use need to be actively shaped by provisioning relevant training data, specifying the desired benefits, and evaluating those benefits via testing in real-world deployments.

We conduct research to assess whether commercial language models support real-world needs and whether they can follow the medical instructions that clinicians would expect them to follow. We build clinical foundation models such as CLMBR and MOTOR, and verify their benefits, such as robustness over time, populations, and sites. In addition, we make available de-identified datasets such as EHRSHOT for few-shot evaluation of foundation models.